AI in eDiscovery has been a hot topic for some time now, and with generative AI emerging, the topic is only going to get hotter. While there is little argument that AI can make eDiscovery faster and more cost-efficient, it’s important to remember that the basics of the eDiscovery process are still crucial.

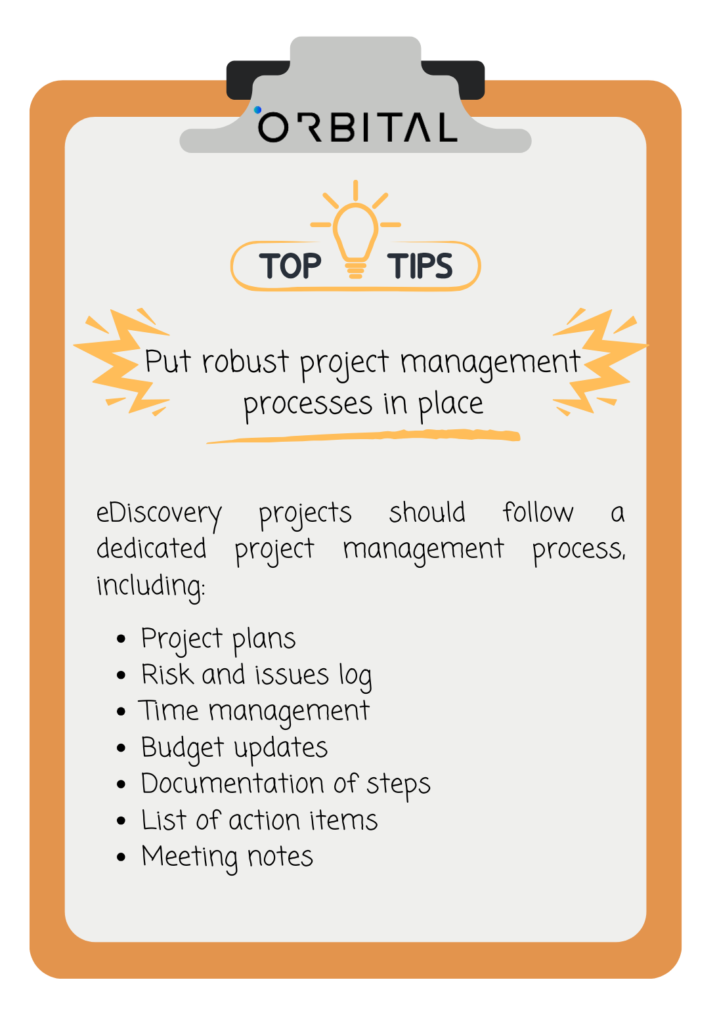

eDiscovery is based on the widely recognised framework, the EDRM (Electronic Discovery Reference Model). The process consists of nine stages:

1. Information Governance

2. Identification

3. Preservation

4. Collection

5. Processing

6. Review

7. Analysis

8. Production

9. Presentation

For the purposes of this article, we will focus on stages 2 to 7.

Identification & Preservation

The first step of any eDiscovery project is data identification. It is essential to know what data is needed for the project and where it is located. At this stage, questions around retention policies, legal holds, user policies, and device usage will arise, as it is important to understand the data landscape.

Once the data has been identified, it needs to be defensibly preserved, which is where digital forensic teams come into play.

Collection

Forensic collection ensures that data is collected in a defensible and verifiable manner, and that the integrity of the data is maintained throughout the collection process. If this process is not watertight, there is a risk of failure and spiralling additional costs at a later stage, not to mention the severe sanctions that can follow spoliation of data. Specialised software and hardware are used to preserve the integrity of the data.
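To illustrate what "verifiable" can mean in practice, one common approach is to record a cryptographic hash of each file at collection time and recompute it later to confirm nothing has changed. The sketch below is a minimal illustration only and does not describe any particular forensic tool; the function names and the manifest format (a simple path-to-hash mapping) are assumptions made for this example.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(files: list[Path]) -> dict[str, str]:
    """Record a hash for every collected file (hypothetical manifest format)."""
    return {str(path): sha256_of(path) for path in files}


def verify_collection(manifest: dict[str, str]) -> list[str]:
    """Return the paths that are missing or whose hash no longer matches."""
    mismatched = []
    for path, expected in manifest.items():
        candidate = Path(path)
        if not candidate.exists() or sha256_of(candidate) != expected:
            mismatched.append(path)
    return mismatched
```

An empty list from `verify_collection` suggests the files are byte-for-byte identical to what was originally collected; any path it returns would need to be investigated.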

Processing

Once the data has been collected, the next stage is to process it. Essentially, this step involves putting the data into a usable format so that it can be searched and reviewed. It involves a lot of machine time, and the steps can be tailored to the requirements of the project. Here are some of the standard activities which take place during processing:

– DeNISTing – the removal of system files, program files, and other non-user-created data (the name comes from the NIST reference list of known software files)

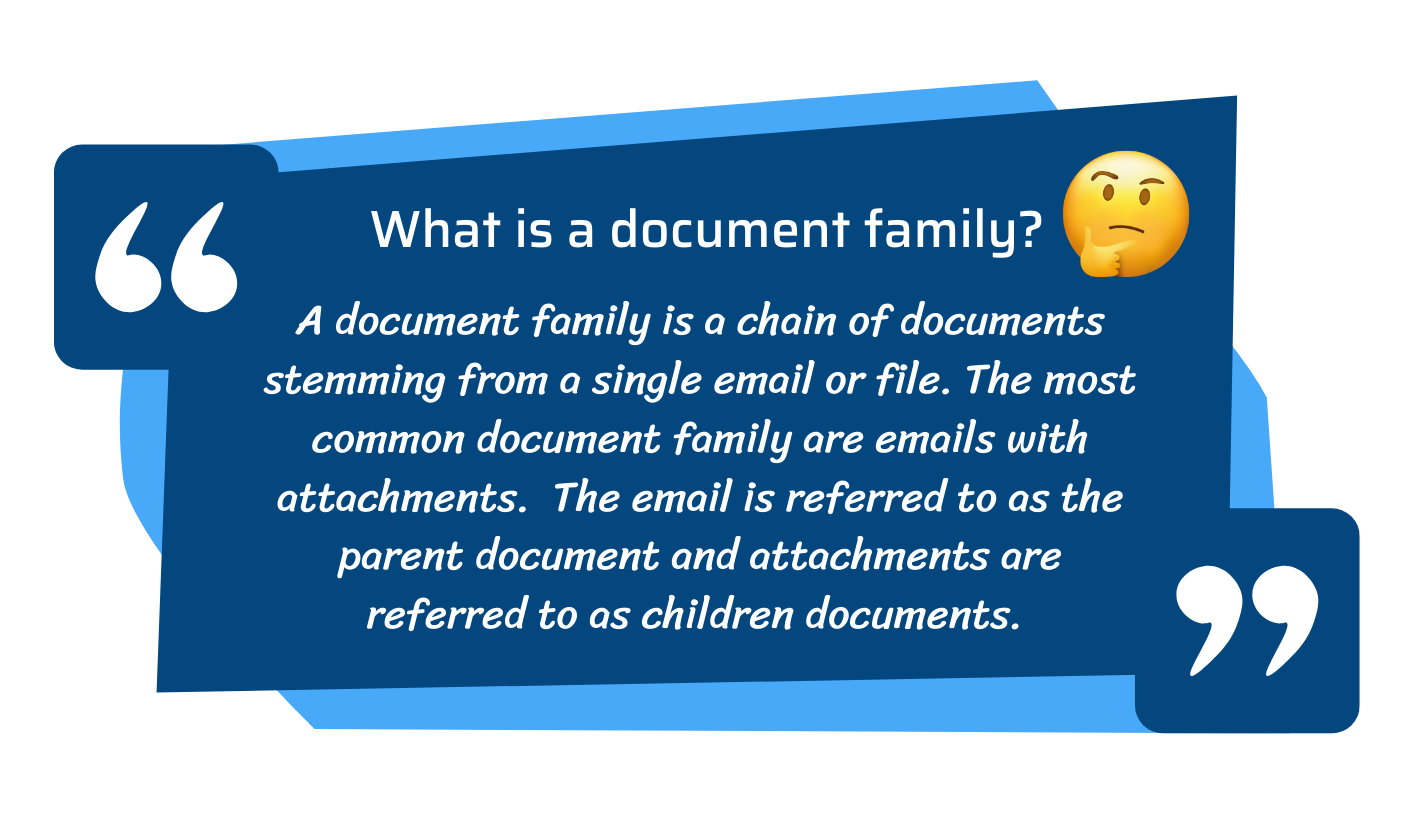

– Deduplication – the removal of identical document families

– Document extraction – this involves extracting attachments from emails, unzipping container files and sometimes extracting embedded objects so that each document is displayed individually in the review platform

– Metadata extraction – the information about a document is extracted and put into separate fields, e.g. for emails, values such as To, From, Subject and Body are each captured in their own field

– Text extraction – all the text from a document is extracted and put into a field so it can be easily searched

– OCR (Optical Character Recognition) – printed or handwritten text in images and scanned documents is converted into machine-readable text

– Numbering – each document is assigned a unique identifier so that it can be easily referenced. Document families are also assigned a unique identifier to ensure the relationship between the documents is preserved
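Three of the steps above (deNISTing, deduplication and numbering) lend themselves to a short sketch. This is a simplified illustration under stated assumptions, not how any specific processing engine works: the `Document` class, the `DOC` prefix, the idea of reusing the parent's identifier as the family identifier, and the `known_system_hashes` set (standing in for a reference hash list) are all hypothetical.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Document:
    name: str
    content: bytes
    attachments: list["Document"] = field(default_factory=list)
    doc_id: str = ""
    family_id: str = ""


def content_hash(doc: Document) -> str:
    """Hash of a single document's bytes."""
    return hashlib.sha256(doc.content).hexdigest()


def family_hash(parent: Document) -> str:
    """Hash of a parent together with its attachments, in order."""
    digest = hashlib.sha256(content_hash(parent).encode())
    for child in parent.attachments:
        digest.update(content_hash(child).encode())
    return digest.hexdigest()


def process(families: list[Document], known_system_hashes: set[str]) -> list[Document]:
    """DeNIST, deduplicate at family level, then number what remains."""
    seen = set()
    kept = []
    for parent in families:
        if content_hash(parent) in known_system_hashes:
            continue  # deNISTing: drop known system/program files
        fingerprint = family_hash(parent)
        if fingerprint in seen:
            continue  # deduplication: an identical family was already kept
        seen.add(fingerprint)
        kept.append(parent)

    counter = 0
    for parent in kept:
        counter += 1
        parent.doc_id = f"DOC{counter:07d}"
        parent.family_id = parent.doc_id  # assumption: family ID = parent ID
        for child in parent.attachments:
            counter += 1
            child.doc_id = f"DOC{counter:07d}"
            child.family_id = parent.family_id
    return kept
```

Hashing the whole family, rather than each file on its own, reflects the point that deduplication works on document families: an attachment is only dropped when the email it belongs to is also a duplicate.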

It is important to perform sufficient quality control (QC) checks to ensure documents were processed correctly and display as expected in the review platform. At this stage, we usually encounter some processing errors, which could be due to corrupt files, password-protected files, unreadable file types and so on. Discussions take place between the client and the eDiscovery vendor to decide how to address these files. In most scenarios, the errored files are reprocessed to try to resolve the issue. If the issue cannot be resolved, the files are labelled “processing exceptions” and are not loaded to the review platform.
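The reprocess-then-label approach described above can be sketched as a simple retry loop. This is only an illustration: `process_file` is a hypothetical callable standing in for whatever the processing tool actually does, and `ProcessingError` and `max_attempts` are names invented for this example.

```python
from typing import Callable, Iterable


class ProcessingError(Exception):
    """Raised by the (hypothetical) processing step when a file fails."""


def process_with_retry(
    files: Iterable[str],
    process_file: Callable[[str], None],
    max_attempts: int = 2,
) -> tuple[list[str], dict[str, str]]:
    """Return (files ready to load, processing exceptions with their reason)."""
    loaded = []
    exceptions = {}
    for name in files:
        last_error = "not attempted"
        for _ in range(max_attempts):
            try:
                process_file(name)
            except ProcessingError as err:
                last_error = str(err)  # remember why it failed, then retry
            else:
                loaded.append(name)
                break
        else:
            # Every attempt failed: label the file rather than load it.
            exceptions[name] = last_error
    return loaded, exceptions
```

Keeping the failure reason alongside each exception gives the client and the vendor something concrete to discuss, for example whether a password can be supplied for a protected file.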

Review and Analysis

And now onto the fun stuff…

Once the data is loaded into the chosen review platform, we can start using all the bells and whistles to analyse, search and filter the data. We work closely with the client to understand what they are looking for within the data set and then we design workflows to ensure they are able to see the relevant documents as quickly as possible. Here, we can leverage a variety of tools, depending on the requirements of the project, such as:

– Near duplicate analysis

– Email threading

– Active learning

– Timeline analysis

– Image labelling

– Behavioural patterns

– Sentiment analysis

– Communication webs

– Thematic analysis

– And much more!
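To give a flavour of how one of these tools works under the hood, near duplicate analysis can be approximated by comparing overlapping word sequences ("shingles") between documents. Review platforms use their own, often proprietary, methods, so the sketch below is a stand-in technique (Jaccard similarity over three-word shingles) with hypothetical function names and an arbitrary default threshold.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping runs of `size` lower-cased words."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles (1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(docs: dict[str, str], threshold: float = 0.8):
    """Return (id, id, score) for each pair scoring at or above the threshold."""
    ids = sorted(docs)
    sets = {doc_id: shingles(docs[doc_id]) for doc_id in ids}
    pairs = []
    for x in range(len(ids)):
        for y in range(x + 1, len(ids)):
            score = jaccard(sets[ids[x]], sets[ids[y]])
            if score >= threshold:
                pairs.append((ids[x], ids[y], score))
    return pairs
```

Comparing every pair like this is only practical for small sets; it is meant to show the idea rather than a method that would scale to a full review population.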

The main aim of the eDiscovery provider is to help the client find the relevant material as effectively and efficiently as possible. Most clients, understandably, want to spend as little time as possible reviewing documents – although manual review will always feature in an eDiscovery project, especially as it helps to refine the AI technology.

(A future article will dive deeper into these tools and how to leverage them during an eDiscovery project.)

Conclusion

In summary, although AI is undeniably establishing its presence in eDiscovery, it’s crucial not to neglect the fundamentals, as mishandling eDiscovery can result in substantial financial and reputational consequences. Just as you wouldn’t invest in a house lacking a strong foundation, exercise caution when selecting eDiscovery services, and ensure that the chosen company employs a solid workflow and possesses a deep grasp of the essentials.